反向传播算法backpropagation

反向传播算法是用来求代价函数C对权重w和偏置b的偏导数的,有了这个偏导数,随机梯度下降算法才可以应用在深度神经网络的学习中。偏导数其实就代表改变本身对代价函数的影响的大小。

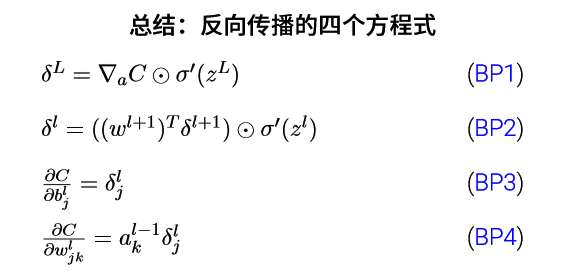

𝜹是代价函数C对节点的偏导数,计算出𝜹后,就可以根据3,4式来计算代价函数C对权重和偏置的偏导数。而𝜹是通过从后向前反向一步一步迭代计算的(公式2),所以,要首先计算输出层没一个节点的𝜹(公式1)。

a是激活值,𝝈是激励函数,w是l层的权重矩阵,b是偏置向量。其中,中间量z是l层的带权输入

在算式BP1中,∇C是代价函数对激活值a的偏导数,可以看到代价函数对激活值a的改变速度。当代价函数C为二次代价函数时,

∇C就是:a-y, 此时BP1就成为:

𝝈'(z)是𝝈对z的导数,表示z处激活函数的变化速率。

实现方法:

def sigmoid(z):

return 1.0/(1.0+np.exp(-z))

def sigmoid_prime(z):

return sigmoid(z)*(1-sigmoid(z))

def delta(z, a, y):

return (a-y) * sigmoid_prime(z)

def backprop(self, x, y):

使用反向传播算法计算𝛁b 和𝛁w

反向传播算法是用来求代价函数C对权重w和偏置b的偏导数的,有了这个偏导数,随机梯度下降算法才可以应用在深度神经网络的学习中。偏导数其实就代表改变本身对代价函数的影响的大小。

𝜹是代价函数C对节点的偏导数,计算出𝜹后,就可以根据3,4式来计算代价函数C对权重和偏置的偏导数。而𝜹是通过从后向前反向一步一步迭代计算的(公式2),所以,要首先计算输出层没一个节点的𝜹(公式1)。

a是激活值,𝝈是激励函数,w是l层的权重矩阵,b是偏置向量。其中,中间量z是l层的带权输入

在算式BP1中,∇C是代价函数对激活值a的偏导数,可以看到代价函数对激活值a的改变速度。当代价函数C为二次代价函数时,

∇C就是:a-y, 此时BP1就成为:

𝝈'(z)是𝝈对z的导数,表示z处激活函数的变化速率。

实现方法:

def sigmoid(z):

return 1.0/(1.0+np.exp(-z))

def sigmoid_prime(z):

return sigmoid(z)*(1-sigmoid(z))

def delta(z, a, y):

return (a-y) * sigmoid_prime(z)

def backprop(self, x, y):

使用反向传播算法计算𝛁b 和𝛁w

| """Return a tuple ``(nabla_b, nabla_w)`` representing the | |

| gradient for the cost function C_x. ``nabla_b`` and | |

| ``nabla_w`` are layer-by-layer lists of numpy arrays, similar | |

| to ``self.biases`` and ``self.weights``.""" | |

| nabla_b = [np.zeros(b.shape) for b in self.biases] | |

| nabla_w = [np.zeros(w.shape) for w in self.weights] | |

| # feedforward | |

| activation = x #用来保存激活值 | |

| activations = [x] # list to store all the activations, layer by layer #用来保存带权输入 | |

| zs = [] # list to store all the z vectors, layer by layer | |

| for b, w in zip(self.biases, self.weights): | |

| | z = np.dot(w, activation)+b #计算带权输入z |

| zs.append(z) | |

| activation = sigmoid(z) #计算激活值 | |

| activations.append(activation) | |

| # backward pass # def delta(z, a, y): # return (a-y) * sigmoid_prime(z) # 计算BP1 | |

| delta = (self.cost).delta(zs[-1], activations[-1], y) # 计算BP3 | |

| nabla_b[-1] = delta #计算BP4 | |

| nabla_w[-1] = np.dot(delta, activations[-2].transpose()) | |

| # Note that the variable l in the loop below is used a little | |

| # differently to the notation in Chapter 2 of the book. Here, | |

| # l = 1 means the last layer of neurons, l = 2 is the | |

| # second-last layer, and so on. It's a renumbering of the | |

| # scheme in the book, used here to take advantage of the fact | |

| # that Python can use negative indices in lists. | |

| for l in xrange(2, self.num_layers): | |

| z = zs[-l] | |

| sp = sigmoid_prime(z) # 计算BP2 | |

| delta = np.dot(self.weights[-l+1].transpose(), delta) * sp # 计算BP3 | |

| nabla_b[-l] = delta # 计算BP4 | |

| nabla_w[-l] = np.dot(delta, activations[-l-1].transpose()) | |

| return (nabla_b, nabla_w) |

No comments:

Post a Comment